Hello,

We have started playing with the BikePoint API.

When we count the number of bikes every 5 minutes, we sometimes get “incorrect” data (maybe cached?).

I tried to debug with curl, and sometimes the API returns strange headers:

date: Mon, 15 Mar 2021 08:43:45 GMT

content-type: application/json; charset=utf-8

cache-control: No-Cache, s-maxage=604800.000

via: 1.1 varnish

age: 125894

access-control-allow-headers: Content-Type

access-control-allow-methods: GET,POST,PUT,DELETE,OPTIONS

access-control-allow-origin: *

api-entity-payload: Place

x-backend: api

x-cache: HIT

x-cache-hits: 2200

x-cacheable: Yes. Cacheable

x-frame-options: deny

x-no-smaxage: true

x-proxy-connection: unset

x-ttl: 604800.000

x-ttl-rule: 3

x-varnish: 228692015 218832366

x-aspnet-version: 4.0.30319

x-operation: BikePoint_GetAll

x-api: BikePoint

cf-cache-status: DYNAMIC

cf-request-id: 08d6a7495f0000fa80d9ac5000000001

expect-ct: max-age=604800, report-uri="https://report-uri.cloudflare.com/cdn-cgi/beacon/expect-ct"

server: cloudflare

cf-ray: 630474bbc982fa80-AMS

Note the age and x-ttl values.

Most of the time, age is below 60 and x-ttl is 60.

For example:

date: Mon, 15 Mar 2021 08:56:26 GMT

content-type: application/json; charset=utf-8

cache-control: public, must-revalidate, max-age=30, s-maxage=60

via: 1.1 varnish

age: 0

access-control-allow-headers: Content-Type

access-control-allow-methods: GET,POST,PUT,DELETE,OPTIONS

access-control-allow-origin: *

api-entity-payload: Place

x-backend: api

x-cache: MISS

x-cacheable: Yes. Cacheable

x-frame-options: deny

x-proxy-connection: unset

x-ttl: 60.000

x-ttl-rule: 0

x-varnish: 1644999551

x-aspnet-version: 4.0.30319

x-operation: BikePoint_GetAll

x-api: BikePoint

cf-cache-status: DYNAMIC

cf-request-id: 08d6b2e00900004c62f583b000000001

expect-ct: max-age=604800, report-uri="https://report-uri.cloudflare.com/cdn-cgi/beacon/expect-ct"

server: cloudflare

cf-ray: 630487467c734c62-AMS

As I understand it, the API sometimes returns old data. Why, and how can we fix it?
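To make the check concrete, here is a minimal Python sketch of how the two sets of headers above could be told apart. The function names and the 60-second threshold are my own assumptions (the threshold is simply the x-ttl we usually see), not anything documented by TfL.

```python
def parse_s_maxage(cache_control):
    """Return the s-maxage directive of a Cache-Control value in seconds, or None."""
    for directive in cache_control.split(","):
        name, _, value = directive.strip().partition("=")
        if name.lower() == "s-maxage":
            try:
                return float(value)
            except ValueError:
                return None
    return None


def looks_stale(headers, max_age_seconds=60):
    """Return True when the Age header is larger than max_age_seconds.

    max_age_seconds=60 is an assumption based on the usual x-ttl value.
    """
    lowered = {key.lower(): value for key, value in headers.items()}
    try:
        age = int(lowered.get("age", 0))
    except (TypeError, ValueError):
        return True  # unreadable Age header: treat the response as suspect
    return age > max_age_seconds
```

With the first set of headers (age: 125894) `looks_stale` returns True, and with the second set (age: 0) it returns False.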

Thanks

Hello @briantist

As I understand it, there is Cloudflare plus Varnish in front of the API.

I’m pretty sure something is going wrong there.

I tried sending Cache-Control: no-cache from the client side (still with curl), without success.

If you look at the data when it seems to be old (age header > 60) and check AdditionalProperties.modified (JSON path $[*].additionalProperties[0].modified), the most recent dates are old too (roughly the current time minus the age header).

The problem is that this is not a matter of seconds or minutes, but days!
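For anyone who wants to reproduce this check, here is a rough Python sketch of what I mean. It assumes the response body has already been parsed into a list of dicts, and that `modified` is an ISO-style UTC timestamp beginning with `YYYY-MM-DDTHH:MM:SS` (that format is my assumption, so adjust the parsing if yours differs). The function names are mine.

```python
from datetime import datetime, timezone


def newest_modified(bike_points):
    """Return the latest additionalProperties[0].modified as a UTC datetime, or None."""
    stamps = []
    for point in bike_points:
        props = point.get("additionalProperties") or []
        if not props or not props[0].get("modified"):
            continue
        # Assumption: the first 19 characters are "YYYY-MM-DDTHH:MM:SS" in UTC;
        # any fractional seconds and the trailing "Z" are ignored.
        text = props[0]["modified"][:19]
        parsed = datetime.strptime(text, "%Y-%m-%dT%H:%M:%S")
        stamps.append(parsed.replace(tzinfo=timezone.utc))
    return max(stamps) if stamps else None


def data_age_seconds(bike_points, now=None):
    """Return how many seconds old the newest record is, or None if there is none."""
    newest = newest_modified(bike_points)
    if newest is None:
        return None
    if now is None:
        now = datetime.now(timezone.utc)
    return (now - newest).total_seconds()
```

If the value returned by `data_age_seconds` is close to the age header, the payload really is as old as the cache says it is.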

@PierrickP

With TfL API requests, it’s often possible to get around this problem by adding another “dummy” GET parameter, for example “&cache-bypass=1615819430202” (the current Unix time in milliseconds). I know this has been a useful workaround for another TfL dataset.
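As a sketch of that idea in Python: the helper name `with_cache_bypass` is made up for illustration, the parameter name is just the one from my example, and whether a given endpoint accepts an extra parameter is not guaranteed.

```python
import time
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit


def with_cache_bypass(url, now_ms=None):
    """Append a cache-bypass=<unix time in ms> query parameter to url."""
    if now_ms is None:
        now_ms = int(time.time() * 1000)
    parts = urlsplit(url)
    # Keep any query parameters that are already present.
    query = parse_qsl(parts.query, keep_blank_values=True)
    query.append(("cache-bypass", str(now_ms)))
    return urlunsplit(parts._replace(query=urlencode(query)))
```

Because the value changes on every call, a cache that keys on the full URL sees each request as a new one.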

@briantist I already tried that. Sadly, the endpoint returns 404 if we add a query string.

I guess you could use the call that lists all the BikePoint IDs and then spend your 500 calls a minute round-robining through them, calling each one from https://api.tfl.gov.uk/BikePoint/BikePoints_303 all the way up to https://api.tfl.gov.uk/Place/BikePoints_715

It’s not elegant, but it might get you past the problem you have.

Thank you! This is a possible solution, but not really applicable (nor elegant, indeed).


There are 790 stations, so we would exceed the limit of 500 requests per minute.

It’s a pity to spend 2 minutes fetching data that we could get in a single request.

I imagine other reusers have the same problem? It’s still a serious concern.

Is anyone who manages the API active on the forum?

@PierrickP

Yes, there are 790 stations, so that’s about a minute and a half of calls. I’m presuming you’re doing this with a cron job, so it’s just a loop and some usleep() calls to make sure you don’t hit the rate limit.
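Something like the following Python sketch is what I have in mind. `fetch_one` is a hypothetical callable you would supply (one request per BikePoint ID), and the 500-per-minute figure is the limit mentioned in this thread. Spacing calls 60 / 500 = 0.12 seconds apart means 790 stations take 789 × 0.12, or roughly 95 seconds.

```python
import time


def fetch_all_paced(ids, fetch_one, max_per_minute=500, sleep=time.sleep):
    """Call fetch_one(id) for every id, pausing so the rate limit is not exceeded.

    fetch_one is a placeholder for whatever function performs a single request.
    """
    delay = 60.0 / max_per_minute  # 0.12 s between calls at 500 per minute
    results = {}
    for index, point_id in enumerate(ids):
        if index:
            sleep(delay)  # no pause needed before the very first call
        results[point_id] = fetch_one(point_id)
    return results
```

The `sleep` argument is only there so the pacing can be tested without actually waiting.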

The main issue you have here is that the provision of the data is “as is”. If you want to use it, you have to deal with what you have.

@jamesevans may be able to provide some additional support, but it’s probably easier to work around!


Small update

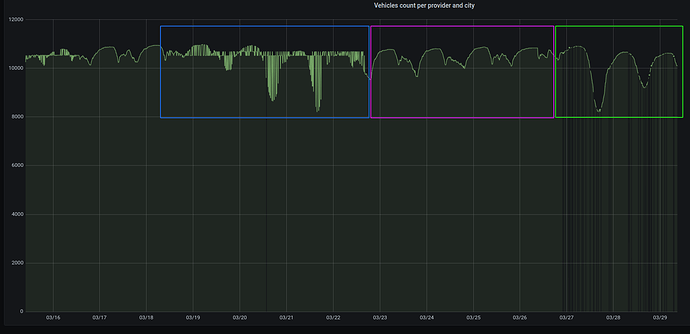

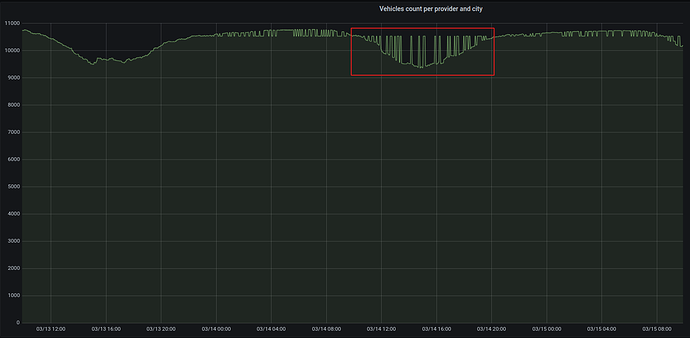

There are good days and some bad days.

In the blue square, there are several days with wrong answers (maybe 1/3 or 1/2 of them).

They are followed by the purple square, where the answers are always good.

In the green square, I filtered out answers with an age header > 60 (we retry 3 times).

We can see it’s a bad day, but with this filter we avoid incorrect values (better to have no value at all).
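Roughly, the filter works like this Python sketch. It is a simplification, not our production code: `fetch` stands for any callable returning a `(headers, body)` pair, and I am assuming three attempts in total and a 60-second threshold.

```python
def fetch_fresh(fetch, max_age_seconds=60, attempts=3):
    """Return the first body whose Age header is <= max_age_seconds, else None.

    fetch is a placeholder callable returning (headers_dict, body).
    """
    for _ in range(attempts):
        headers, body = fetch()
        lowered = {key.lower(): value for key, value in headers.items()}
        try:
            age = int(lowered.get("age", 0))
        except (TypeError, ValueError):
            continue  # unreadable Age header: treat as stale and try again
        if age <= max_age_seconds:
            return body
    return None  # every attempt was stale, so record no value for this run
```

Returning `None` is what produces the gaps on the graph instead of wrong counts.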
