James

This evening there are two separate sets of WTTs in the Data Bucket, though the second set of links (with “pub” included in the path) do not work.

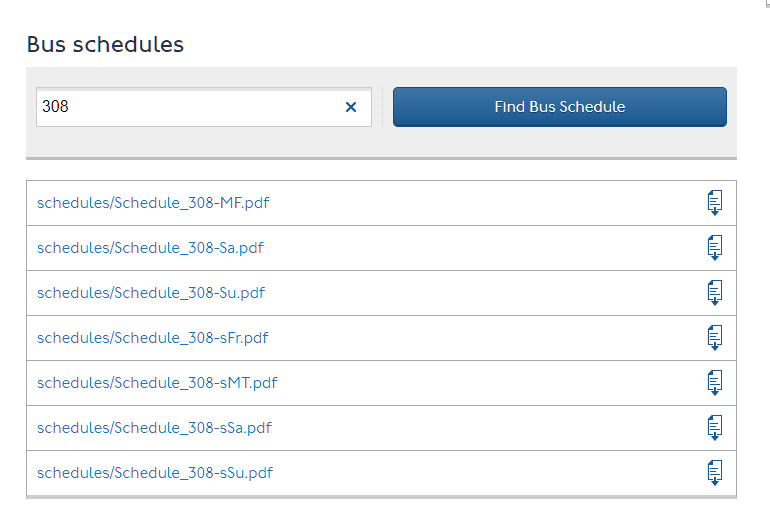

So, taking the set that does work, mostly carrying the date of 13th January, how does it look?

Not tidy. Not lush. I am afraid the only word I can use is “grim”. Outdated schedules have crept in for an alarmingly large number of routes. List (1) below is where all or most of the current WTTs have been overwritten. List (2) shows those where some (but not most) current WTTs have been overwritten. List (3) is similar, but here the wrong version for the right SCN is now present.

I am struggling to see how this can come about, and quite how overwriting with test data can be the cause, but if that is what you are being told I suppose it must be right.

I can identify dodgy files very quickly after downloading with relatively unsophisticated techniques. The only slightly clever thing I have to do is access the title property (which includes the Service Change Number) of each file and check that the title does not match anything that had previously been loaded. I can see that a check of that nature is not much good if the publication process is not faithfully picking up a new and correctly created set of files.
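For what it is worth, the title check amounts to something like the rough Python sketch below. It assumes the title of each downloaded file has already been read into a dictionary, since how that is done depends on the file format. The function name, file names and titles are all made up for illustration.

```python
def find_stale_files(current_titles, previously_loaded):
    """Return names of files whose title matches one seen in an earlier load.

    current_titles: dict mapping file name -> title property (hypothetical input)
    previously_loaded: iterable of titles recorded from earlier downloads
    """
    # Normalise case and surrounding whitespace so trivial differences do not hide a match.
    seen = {title.strip().lower() for title in previously_loaded}
    return sorted(
        name
        for name, title in current_titles.items()
        if title.strip().lower() in seen
    )


# Made-up example data, not real file names or titles.
current = {
    "route_A.pdf": "Route A SCN 200 v2",
    "route_B.pdf": "Route B SCN 150 v1",
}
earlier = {"Route B SCN 150 v1"}

stale = find_stale_files(current, earlier)
print(stale)
```

Any file name returned carries a title that had already been loaded before, which is the signal that an old schedule has come back.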

Oh, and there has been no improvement for the routes for which WTTs are missing altogether.

(1) All (or at any rate most) schedules incorrectly overwritten.

You will note that some of these are serial offenders.

17

25U

92

96

105

140

186

216

224

225

238U

280

308

394

412

440

453

533

697

698

E6

H10

H32

H98

K1

W16

N8

N109

N307

N453

RB1

RB2

RB4

RB5

RB6

RB6A

UL7

UL8

UL16

UL79

UL80

(2) One or two schedules incorrectly overwritten

3 (Ce)

12 (sMT)

14 (sMT)

47 (sFr sMT)

183 (sTh)

192 (MF)

229 (MFSc)

281 (sMF)

343 (sMT)

349 (Fr MT)

N12 (sMTNt)

N14 (sSuNt)

N18 (sMTNt)

N36 (sMTNt sMFNt sSuNt)

N37 (sMTNt)

N148 (sSaNt)

N155 (sSaNt)

N281 (sSaNt)

N285 (sSaNt)

N343 (sMTNt)

(3) Wrong version has overwritten later version (for same SCN)

8 (sSa)

15 (sSa)

18 (sSa)

88 (sSa)

113 (Ce)

345 (Ce)

N14 (sMTNt)

N15 (sMTNt)

N18 (sSaNt)

N26 (sSaNt)

N35 (sMTNt)

N44 (sMTNt)

N47 (sFrNt sMTNt)

N57 (sMTNt)

N72 (sSaNt)

N105 (sSaNt)

N213 (sMTNt)

N296 (sFrNt)

N365 (sSuNt)

Michael